GenAI as the ultimate platform, or a dystopian scenario for media

Here’s an interesting thing to think about: what happens if generative AI platforms become the main interface for humans to access information? And more narrowly, what would it mean for media and journalism?

I already mentioned the essay by Bloomberg News’ editor-in-chief John Micklethwait on AI and journalism the other day. Here’s a passage from the piece:

“[…] reporting will still have enormous value. […] you need reporting. An AI summary is only as good as the story it is based on. And getting the stories is where the humans still matter.”

I wholeheartedly agree with this notion; there’s really no way around it. However, I’m not sure it necessarily means that the future of media and journalism as we know them now is secured.

Here’s the thing. If you look at a general-purpose LLM platform from its developer’s standpoint, you can say that the website of any media publication whose data is being ingested into the LLM is just an intermediary step between the user and the information they want to access (provided by a reporter in most cases). So, why not cut out the middleman by getting journalists to contribute straight into the model?

In fact, this process seems to have already started. I’m aware of multiple companies, in Europe and elsewhere, that are hiring specialist writers — including journalists — to write copy that will never see the light of day. Its sole purpose is to be ‘training fodder’ for a proprietary LLM.

Dystopian as it may sound, I really don’t see many barriers to that happening, outside of the technical limitations related to hallucinations and incremental training of LLMs (both of which could be solved at least partially with a combination of RAG and fine-tuning, as far as I understand). Of course, AI slop spat out by the model will remain just that, but the concept could become quite attractive if implemented at scale; it could also be a way for the platform to differentiate itself in the market.

Imagine an LLM company that both scrapes existing media outlets’ websites aggressively (including through partnership agreements like the ones between OpenAI and the Financial Times or Mistral AI and AFP) and employs its own reporters to feed the model. It could have a human-curated and AI-generated “front page,” and build all sorts of digests, listicles, and news overviews for each reader individually. I wouldn’t be surprised if enough people from less picky audience segments were happy to flock to such publications.

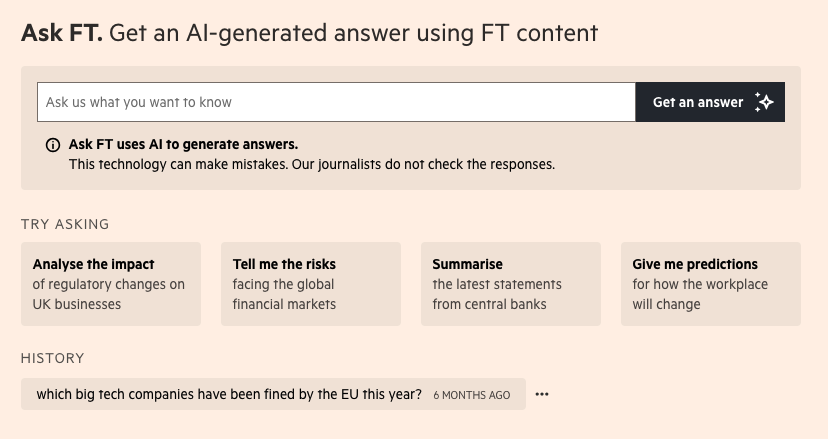

The logical next step from there would be for media outlets to try to protect themselves from being eaten alive by AI companies. Firstly, they’d likely need to train their own models on their own data and offer them through a chat interface to stay competitive. And once again, this seems to be happening already: for example, the Financial Times has ask.ft.com, which does exactly that.

Secondly, traditional media outlets would need to protect their original reporting from being ingested into competing models. And as long as LLMs can’t be successfully trained on data generated by other LLMs, the best way to do that would be to never publish said reporting in the first place, which, again, leaves us with GenAI as the ‘ultimate platform’ for information access.

I wouldn’t go as far as calling this a prediction, but this scenario sounds far less implausible than it would’ve just a couple of years ago. And that’s properly terrifying.

Since there’s no comment section here for the time being, feel free to join the conversation on LinkedIn.